AI Generations: ChatGPT-3 vs ChatGPT-4 on Sol LeWitt’s Wall Drawings

About six weeks ago, I used ChatGPT to implement Sol LeWitt’s Wall Drawings. If that doesn’t mean anything to you, this article explains what’s going on and contains ChatGPT outputs compared to human drawings.

In that article, I used GPT-3, which was all that was available at the time. In this one, I’m trying out the newly released GPT-4.

Let’s compare and contrast.

Note: here are the GPT-4 p5js editor sketches and here are the GPT-3 sketches. The code for each is also linked under the images.

WALL DRAWING #118

“On a wall surface, any continuous stretch of wall, using a hard pencil, place fifty points at random. The points should be evenly distributed over the area of the wall. All of the points should be connected by straight lines.”

GPT-3

Wall Drawing #118 - GPT-3 output

GPT-4

Wall Drawing #118 - GPT-4 output

Okay, immediately we can see a number of improvements here. Well, I feel bad calling them improvements because I did enjoy GPT-3’s off-beat artistry but, let’s be honest.

First of all, GPT-4 interpreted the instructions in a more expected way. GPT-3 drew 50 points and then connected them to other random locations. In fairness, the instruction doesn’t actually specify implicitly what to connect them to, but most humans would connect the points to each other, which GPT-4 has done.

// GPT-3

function draw() {

background(255);

for (let i = 0; i < 50; i++) {

let x = random(0, width);

let y = random(0, height);

point(x, y);

for (let j = 0; j < i; j++) {

line(x, y, random(0, width), random(0, height));

}

}

}// GPT-4

const numPoints = 50;

let points = [];

function setup() {

createCanvas(800, 600);

for (let i = 0; i < numPoints; i++) {

points.push(createVector(random(width), random(height)));

}

}

function draw() {

background(240);

stroke(0);

strokeWeight(1);

// Draw the points

for (let i = 0; i < points.length; i++) {

ellipse(points[i].x, points[i].y, 4);

}

// Connect all points with lines

for (let i = 0; i < points.length; i++) {

for (let j = i + 1; j < points.length; j++) {

line(points[i].x, points[i].y, points[j].x, points[j].y);

}

}

noLoop(); // Stop the draw loop, as the image is static

}

In comparing the code, we can see that GPT-3 created a weird ‘for loop’ situation where each point had consecutively more lines than the last. Nothing in the instruction that says to do that but nothing says not to either, I guess. GPT-4 has no time for such nonsense, and its code is much more sensible.

GPT-4 added a grey background. An aesthetic choice which I hoped would endure throughout this experiment and spare our eyes from the stark black and white contrasts GPT-3 usually (but not always) produced. Spoiler alert: no such luck.

Wall drawing #11

“A wall divided horizontally and vertically into four equal parts. Within each part, three of the four kinds of lines are superimposed.”

In LeWitt’s vocabulary, the four kinds of lines are: horizontal, vertical, 45º diagonal right and 45º diagonal left. When I did this with GPT-3 I created two outputs: v1, where I did not provide this information and v2, where I did.

I didn’t give GPT-4 the info in this test. It decided of its own accord that a sensible interpretation of “four kinds of lines” is vertical, horizontal and the two diagonals.

GPT-3

Wall Drawing #11 - GPT-3 output v1

GPT-3

Wall Drawing #11 - GPT-3 output v2

GPT-4

Wall Drawing #11 - GPT-4 output v1

In GPT-3’s v2, it messed up the geometry, putting the line intended for the bottom right segment in the bottom left. It also ignored the instruction to put three kinds of lines in each segment.

GPT-4 fixes both those issues and implements the instruction pretty flawlessly.

One frequent disappointment that appears in both GPT-3 and 4 is that the line type choices are usually not random, so the results will be the same every time the code is run. In this output, GPT-4 actually did use random to choose between options for the direction of the diagonal line for each segment but in many outputs either the choice is hard coded (Chat-GPT chooses itself), or it cycles through various options using modulo. It doesn’t contravene the instructions, but it’s not how I’d code it.

WALL DRAWING #16

“Bands of lines 12 inches wide, in three directions (vertical, horizontal, diagonal right) intersecting.”

(I told ChatGPT 100px instead of 12inches)

GPT-3

Wall Drawing #16 - GPT-3 output

GPT-4

Wall Drawing #16 - GPT-4 output

Uh… okay. Bit of a swerve here. At first I thought it had made an elementary mistake like drawing black lines on a black background but, in fact, it has drawn a lot of lines next to each other, so the whole thing looks almost black.

If we look at the code and a brightened up version of the output, we can see what’s going on.

GPT-4

function draw() {

background(255);

// Draw vertical lines

for (let x = 0; x < width; x += 100) {

for (let y = 0; y < height; y += 1) {

line(x, y, x + 100, y);

}

}

// Draw horizontal lines

for (let x = 0; x < width; x += 1) {

for (let y = 0; y < height; y += 100) {

line(x, y, x, y + 100);

}

}

// Draw diagonal lines (right)

for (let x = 0; x < width; x += 100) {

for (let y = 0; y < height; y += 1) {

line(x + y, y, x + y + 1, y + 1);

}

}

noLoop();

}

function mouseClicked() {

redraw();

}GPT-4

Wall Drawing #16 - GPT-4 output brightened up

It’s drawn 100px wide bands of parallel lines and- the horizontal/vertical lines we see in the output are where the ends of these lines overlap by 1px.

With the diagonal lines, let’s generously assume it wanted to do something different, and not that it failed to figure out the geometry to do the same thing diagonally.

In fairness to GPT-4, GPT-3’s interpretation of this quite vague instruction was even less accurate… but, at least you could see it.

WALL DRAWING #19

“A wall divided vertically into six equal parts, with two of the four kinds of line directions superimposed in each part.”

(I told them both about the types of lines)

GPT-3

Wall Drawing #19 - GPT-3 output

GPT-4

Wall Drawing #19 - GPT-4 output v1

At first I thought this was completely off the wall but, actually, GPT-4 has sort of nailed it. I was expecting two lines per segment but it has chosen two types of line and then drawn lots of those lines. Kind of the opposite to the direction GPT-3 took, where it only ever drew one line if it thought it could get away with it.

I gave GPT-4 another couple of goes with the same instructions, for fun.

GPT-4

Wall Drawing #19 - GPT-4 output v2

GPT-4

Wall Drawing #19 - GPT-4 output v3

It’s cool how “divided vertically into six equal parts” was interpreted in two different ways because it’s ambiguous in the instruction whether the parts should sit vertically or the divisions should be vertical. Also, the v2 output is a banger, imo.

WALL DRAWING #46

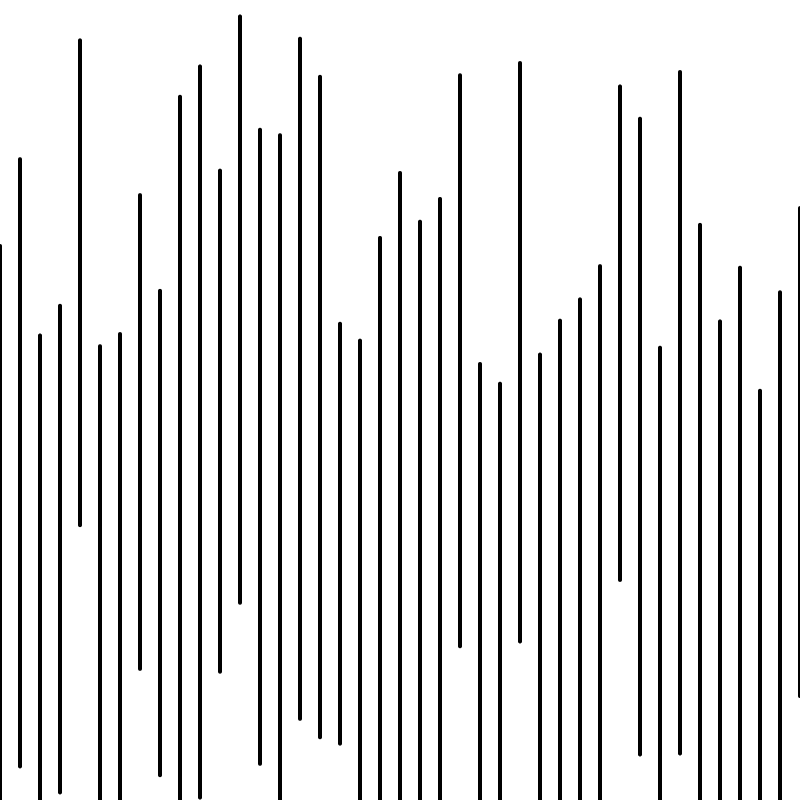

“Vertical lines, not straight, not touching, covering the wall evenly.”

GPT-3

Wall Drawing #46 - GPT-3 output

GPT-4

Wall Drawing #46 - GPT-4 output

This is a much more human interpretation of what “vertical lines, not straight” means, although it’s disappointing that GPT-4 used random() instead of Perlin noise.

As an extra experiment, I tried giving GPT-3 and GPT-4 the following instruction: “Write a p5js sketch that draws 10 wobbly lines”

GPT-3

10 wobbly lines - GPT-3 output

GPT-4

10 wobbly lines - GPT-3 output

It’s like GPT-4 is GPT-3’s older sibling.

In this extra experiment, GPT-4 did use Perlin noise - I’m guessing the word “wobbly” triggered that.

Neither of them seem to know you need to use noFill() when using the beginShape() function to draw a line, so at least generative artists should keep their jobs for about another 2 weeks - not withstanding the fact that it actually looks cool with the fill anyway.

WALL DRAWING #47

“A wall divided into fifteen equal parts, each with a different line direction, and all combinations.”

I provided the information about the four line directions.

GPT-3

Wall Drawing #47 - GPT-3 output

GPT-4

Wall Drawing #47 - GPT-4 output

Obviously GPT-4 had much more success at dividing the canvas into 15 segments, and it chose an appropriate canvas shape to do so. For a second I thought it had calculated the canvas so the diagonal lines are exactly 45º - but they’re not. That stipulation is something it has skirted throughout.

As per usual, it hardcoded the line choices for each segment and if you look at the code linked above, it’s absurdly verbose as a result. I gave it the same prompt with the simple instruction, “choose randomly” added, which worked brilliantly and produces different results on every reload.

Wall Drawing #47 - GPT-4 output v2

WALL DRAWING #51

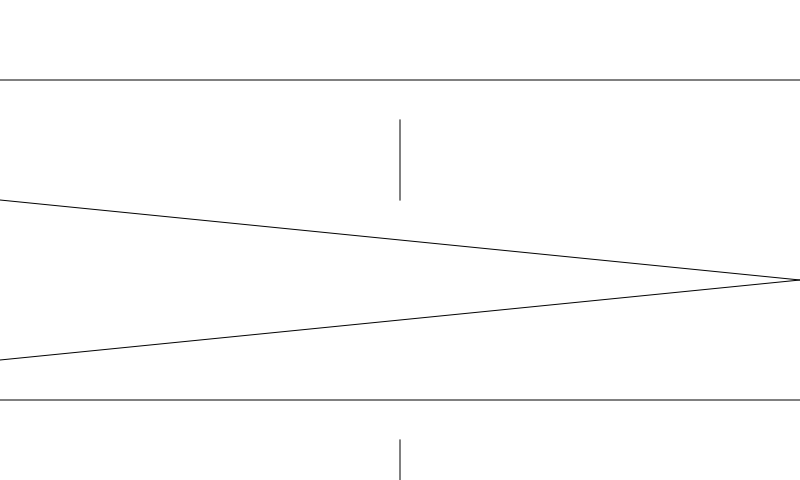

“All architectural points connected by straight lines.”

GPT-3

GPT-4

Giving ChatGPT this prompt is sort of a wild card because the intention is to incorporate architectural points of the wall it’s being drawn on. There’s not a huge functional difference here but the GPT-4 output does look cooler.

WALL DRAWING #154

“A black outlined square with a red horizontal line from the midpoint of the left side toward the middle of the right side.”

Human

Wall Drawing #154 - Human drawn

GPT-3

Wall Drawing #154 - GPT-3 output

GPT-4

Wall Drawing #154 - GPT-4 output

Siiiiiigh. I guess “toward” can mean “all the way to”, but I’m not happy about it.

WALL DRAWING #295

“Six white geometric figures (outlines) superimposed on a black wall.”

In LeWitt’s vocabulary, the six kinds of geometric shapes are: circle, square, triangle, rectangle, trapezoid and parallelogram.

GPT-3

Wall Drawing #295 - GPT-3 output

GPT-4

Wall Drawing #295 - GPT-4 output

GPT-3 outputs had something of a haphazard playfulness that seems to have been lost. However I think GPT-4’s output does look cooler, kind of like a hipster bar logo.

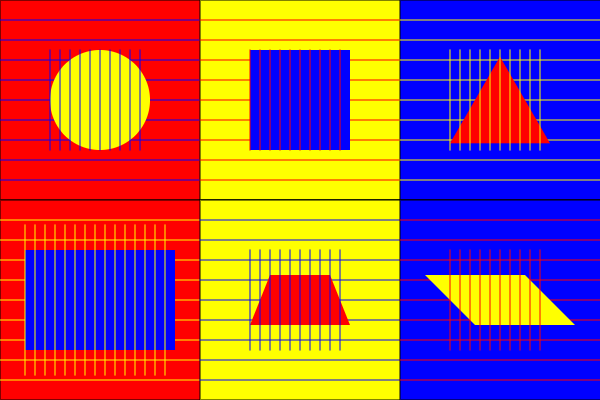

WALL DRAWING #340

“Six-part drawing. The wall is divided horizontally and vertically into six equal parts. 1st part: On red, blue horizontal parallel lines, and in the center, a circle within which are yellow vertical parallel lines; 2nd part: On yellow, red horizontal parallel lines, and in the center, a square within which are blue vertical parallel lines; 3rd part: On blue, yellow horizontal parallel lines, and in the center, a triangle within which are red vertical parallel lines; 4th part: On red, yellow horizontal parallel lines, and in the center, a rectangle within which are blue vertical parallel lines; 5th part: On yellow, blue horizontal parallel lines, and in the center, a trapezoid within which are red vertical parallel lines; 6th part: On blue, red horizontal parallel lines, and in the center, a parallelogram within which are yellow vertical parallel lines. The horizontal lines do not enter the figures.”

GPT-3

Wall Drawing #340 - GPT-3 output

GPT-4

Wall Drawing #340 - GPT-4 output

This is a real tragedy, because the five other parts of the drawing are actually there in GPT-4’s output. However, it used the background() function for the background colour of each segment, accidently filling the entire canvas and covering the earlier segments.

Additionally, GPT-4 chose function names that are already in use by p5js, such as circle() and rect().

I fixed these bits, so we can take a look at how it did otherwise. It hasn’t quite been able to follow the sentence structure of the instructions, but it’s another clear leap forward from GPT-3.

Human

GPT-4 + Human

WALL DRAWING #396

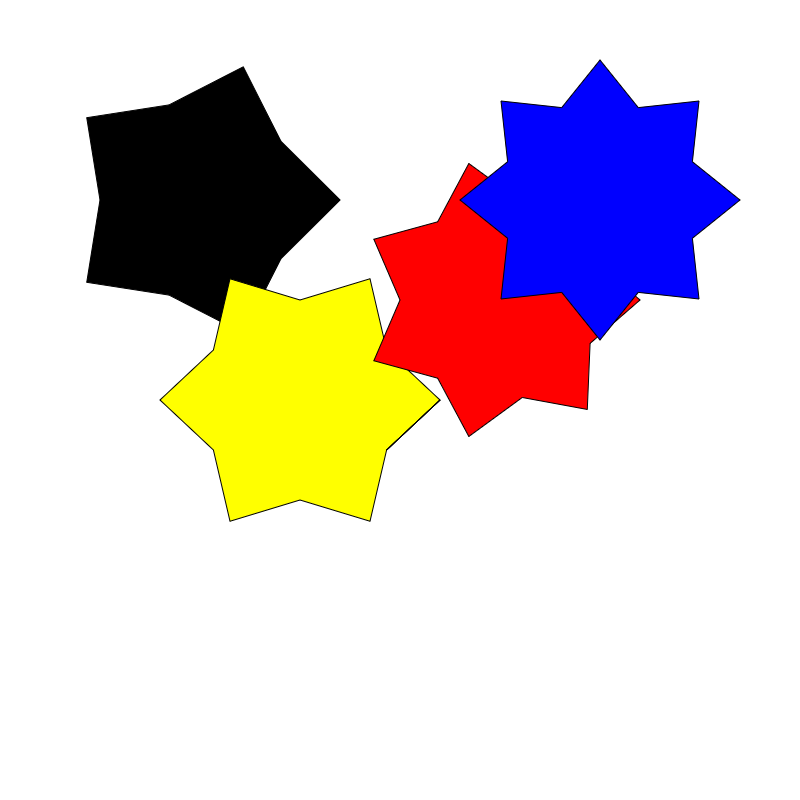

“A black five-pointed star, a yellow six-pointed star, a red seven-pointed star, and a blue eight-pointed star, drawn in color and India ink washes.”

GPT-3

Wall Drawing #396 - GPT-3 output

GPT-4

Wall Drawing #396 - GPT-4 output

Another case where GPT-4 makes more sense but we’ve lost the jaunty vibes from GPT-3.

The most interesting thing for me though, is that GPT-4’s intro to the code said this:

It seems like you want to create a p5.js sketch that draws stars with different numbers of points and colors. However, p5.js doesn't support India ink washes, as it's a digital drawing tool. Nevertheless, we can create the star shapes with the desired colors. Here's a p5.js sketch that achieves that:

This acknowledgement that there’s a part of the instruction it’s incapable of completing seems like a maturity upgrade. GPT-3 was routinely confidently wrong, could this indicate that GPT-4 has more of an awareness of its limitations?

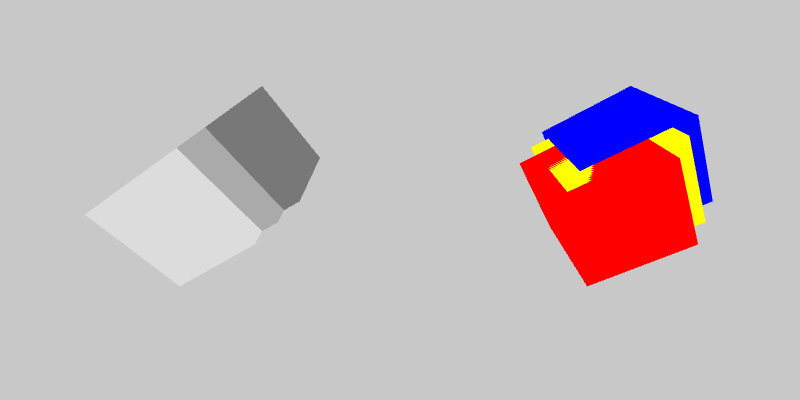

WALL DRAWING #415D

“Double Drawing. Right: Isometric Figure (Cube) with progressively darker graduations of gray on each of three planes; Left: Isometric figure with red, yellow, and blue superimposed progressively on each of the three planes. The background is gray.”

GPT-3

Wall Drawing #415D - GPT-3 output

GPT-4

Wall Drawing #415D - GPT-4 output

GPT-4 dipped into WEBGL mode here with a brave but ultimately unsuccessful effort.

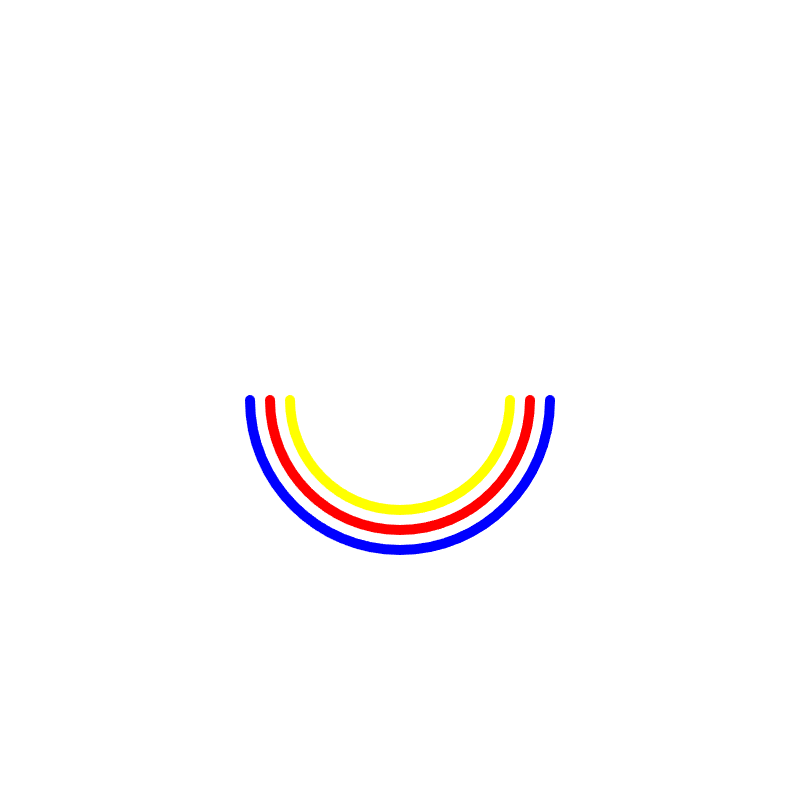

WALL DRAWING #579

“Three concentric arches. The outside one is blue; the middle red; and the inside one is yellow.”

GPT-3

Wall Drawing #579 - GPT-3 output

GPT-4

Wall Drawing #579 - GPT-4 output

Evermore we march towards our demise, as an artificially intelligent chat bot slowly figures out how a human would usually draw an “arch”.

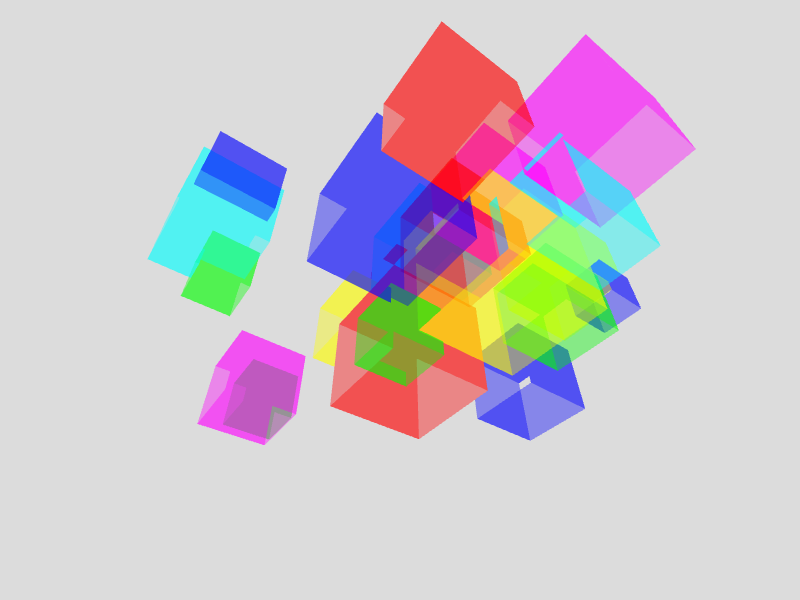

WALL DRAWING #766

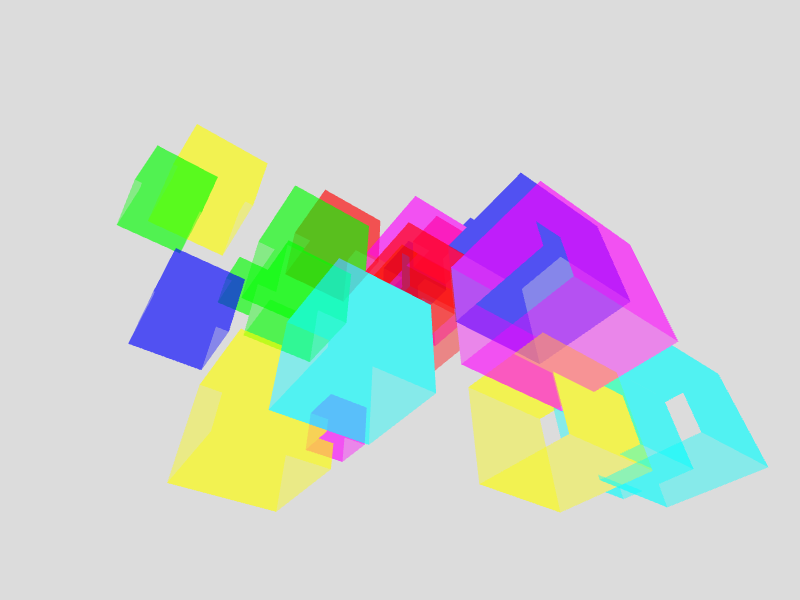

“Twenty-one isometric cubes of varying sizes, each with color ink washes superimposed.”

GPT-3

Wall Drawing #766 - GPT-3 output

GPT-4

Wall Drawing #766 - GPT-4 output

Weirdly, this time GPT-4 didn’t acknowledge it can’t do an “india wash”, sort of dunking on me for saying it can acknowledge its limitations.

Instead, it had a bash at it using translucency, which looks cool. It set the opacity for each colour to 100, so I was suspicious this had happened by chance and that it was thinking that means 100% when in fact it’s 100/255. I asked it though, and it said it did it to create “semi-transparent ink wash effect on the cubes” and confirmed it knew it was out of 255.

Also, this time it placed the cubes using random(), so we can output varied results.

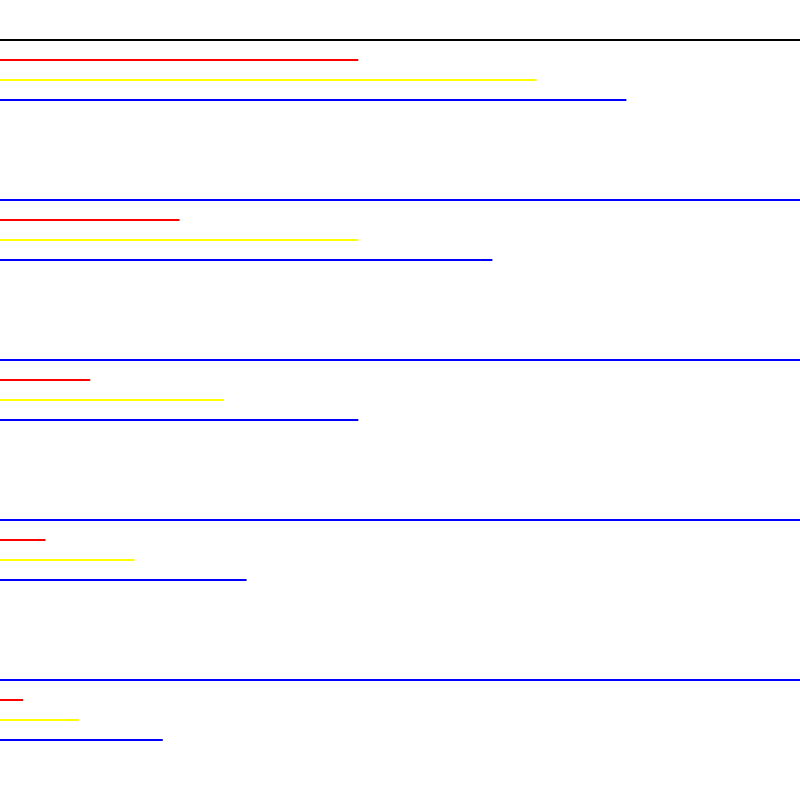

WALL DRAWING #797

“The first drafter has a black marker and makes an irregular horizontal line near the top of the wall. Then the second drafter tries to copy it (without touching it) using a red marker. The third drafter does the same, using a yellow marker. The fourth drafter does the same using a blue marker. Then the second drafter followed by the third and fourth copies the last line drawn until the bottom of the wall is reached.”

GPT-3

Wall Drawing #797 - GPT-3 output

GPT-4

Wall Drawing #797 - GPT-4 output

Bit of a mic drop moment from GPT-4 there.

When the sketch first loads, we only see the first line. Each additional line is added with a mouse click, which feels like the viewer is being incorporated as version of the “drafters” in the prompt.

There are a couple of technical issues, one being the lack of noFill(), which I fixed. Additionally, all the lines are drawn on top of themselves every frame, which creates a blocky appearance.

WALL DRAWING #901

“Color bands and black blob. The wall is divided vertically into six equal bands; red; yellow; blue; orange; purple; green. In the center is a black glossy blob.”

GPT-3

Wall Drawing #901 - GPT-3 output

GPT-4

Wall Drawing #901 - GPT-4 output

Just when you think things are really hotting up, GPT-4 attempts to make a circle look like a “glossy blob” by doing this.

I gave it another chance with “try again but use Perlin noise to draw the blob more blobby”. It did this considerably better than GPT-3 (which just used one Perlin noise call to pick the width of the circle and produced essentially the same output as above) but still quite badly.

GPT-4

Wall Drawing #901 - GPT-4 output v2

WALL DRAWING #1180

“Within a four-meter (160”) circle, draw 10,000 black straight lines and 10,000 black not straight lines. All lines are randomly spaced and equally distributed.”

I changed this to -

“On a 800px canvas, within a 750px circle, draw 10,000 black straight lines and 10,000 black not straight lines. All lines are randomly spaced and equally distributed.”

GPT-3

Wall Drawing #1180 - GPT-3 output

GPT-4

Wall Drawing #1180 - GPT-4 output

Uhhhhhhhhhh, damn. Ok, this is very cool, although the reasons it’s cool are not really to do with GPT-4 following instructions well or writing good code.

The “3D graffiti globe” effect is a by-product of the fact that GPT-4 persists in not using noFill() when drawing wobbly lines. It also drew all the straight lines going straight through the centre of the circle, which created a zoom-blur effect. It did do a very nice job of ensuring all the lines stay inside the circle.

By playing with the numerical variables in the code, I produced these variations -

Wall Drawing #1180 - GPT-4 output

Wall Drawing #1180 - GPT-4 output

+ HUMAN

To me, the interesting thing about Sol LeWitt‘s instructions is seeing how the exact words are interpreted by different implementers. As such, I didn’t intend to ever give ChatGPT extra tips or hints to improve the results. However, it was sometimes impossible to resist, because even a simple additional instruction like “choose randomly” or “use Perlin noise” makes a huge difference and it’s obviously tempting try for the best aesthetic results possible.

Outside of the confines of the Sol LeWitt experiment, there is huge potential for using ChatGPT as a collaborator to create generative art (or anything else). This can be through providing more detailed instructions and iterating over results, or by using AI to get started and then editing the code ourselves.

I asked ChatGPT-4 to use Perlin noise in the #1180 prompt, and then edited the code myself, adjusting variables and adding colours, varied line weights and a few other features.

GPT-4 + Human

Wall Drawing #1180 WIP development - GPT-4 + human output

GPT-4 + Human

Wall Drawing #1180 WIP development - GPT-4 + human output

I’ve seen other GPT-4 experiments where some people in the comments are saying that the human shouldn’t “help” the AI by correcting its mistakes or nudging it towards better choices. I do get that opinion when it comes to testing out AI, although it’s up to the person doing the experiment to decide how they want to go about it. Really though, the best results still come from collaborating with AI.

There are things ChatGPT is good at, like interpreting some vague instructions and quickly writing code to get us going, with really minimal effort. And there are things that humans are good at, like looking at the results and thinking about what to do next, iterating on the results and finding ways to improve it aesthetically. These skillsets go well together, and can create a satisfying and productive workflow.

It’s very possible that AI will become much better at the currently human parts of the collab as well. Even if/when it does, that doesn’t stop us doing them. People still hand knit even though there are knitting machines, paint even though there are cameras, and walk when they have nowhere to go.